Open Neural Network Exchange

- Admin

- Jan 13, 2023


By Dr Mabrouka Abuhmida

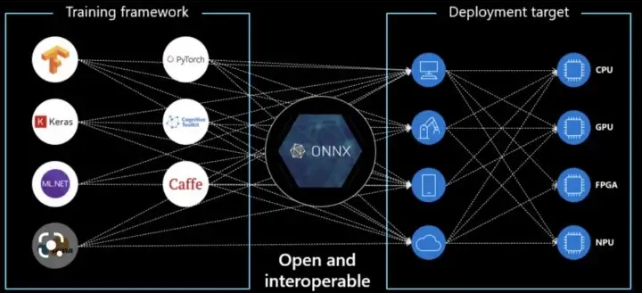

Open Neural Network Exchange (ONNX) is an open, standard format for representing machine learning models, in particular deep learning models. It was introduced by Facebook and Microsoft in September 2017. Because ONNX defines a common set of operators and a common file format, developers can move a model between frameworks using a single file.

In short, it enables interoperability between frameworks that support deep learning, such as PyTorch, TensorFlow, and CNTK.

ONNX allows developers to use the tools and frameworks that work best for them, without being constrained by the limitations of a particular framework. This can be particularly useful in a number of scenarios:

- A model was trained in one framework but needs to be served or deployed in another. ONNX lets developers avoid retraining the model from scratch in the target framework.

- A model was trained in one framework but needs to be fine-tuned in another. Where the target framework can import ONNX models, this lets developers combine the strengths of several frameworks to improve their model; in practice, import support across frameworks is less complete than export support.

- A team of researchers uses different frameworks for experimentation. ONNX lets the team share models and collaborate more easily, even though they work in different frameworks.

Overall, ONNX can help developers to be more productive and efficient, by enabling them to use the best tools and frameworks for each task, and by making it easier to share models and collaborate with others.

ONNX Runtime is an open-source inference engine for executing models stored in the ONNX format. It is designed to be performant, flexible, and platform-agnostic, making it a good choice for deploying ONNX models in a variety of environments.

ONNX Runtime can be used to perform inference on ONNX models in a number of ways. It can be called from a variety of programming languages, including C#, C++, Python, and Java, using APIs that are tailored to each language. It can also be used in a number of different environments, including on Windows, Linux, and macOS, and on both CPU and GPU hardware.

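One of the key features of ONNX Runtime is its performance. Before running a model, it applies graph-level optimizations such as constant folding and fusing adjacent operators into a single kernel. It then delegates computation to hardware-specific execution providers (for example CUDA or TensorRT on NVIDIA GPUs, OpenVINO on Intel hardware, or the default CPU provider with its vectorized kernels), so the same model can use whatever accelerator the target machine offers.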

To summarize the difference between ONNX and ONNX Runtime:

- ONNX is a standard format for representing deep learning models, while ONNX Runtime is an inference engine for executing ONNX models.

- ONNX allows developers to move models between different deep learning frameworks using a single file format, while ONNX Runtime allows developers to execute those models in a variety of environments.

- ONNX is focused on model interoperability, while ONNX Runtime is focused on performance and flexibility.

Here is an example of how you might use ONNX to deploy a model trained using the Keras framework on an IoT device:

First, you will need to train your model using Keras. You can do this with the standalone Keras package or with tf.keras, TensorFlow's built-in implementation of the Keras API.

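Once your model is trained, you will need to convert it to the ONNX format. One option is the onnxmltools package, which provides a set of functions for converting models trained in various frameworks to ONNX. Note that its Keras converter relies on the keras2onnx package, which has been deprecated in favor of tf2onnx; with recent TensorFlow versions, tf2onnx (for example, tf2onnx.convert.from_keras) is the maintained conversion route. With onnxmltools, you can convert a Keras model using the convert_keras function, like this: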

```python
import onnxmltools
from tensorflow import keras

# Load the trained Keras model
model = keras.models.load_model("my_model.h5")

# Convert the model to ONNX; target_opset selects the ONNX operator-set version
onnx_model = onnxmltools.convert_keras(model, target_opset=7)

# Save the ONNX model to a file
onnxmltools.utils.save_model(onnx_model, "my_model.onnx")
```

Next, you will need to install ONNX Runtime on your IoT device. ONNX Runtime publishes prebuilt Python packages for common platforms, including Linux, Windows, and macOS, and pip selects the build that matches your device; for less common architectures you may need to build ONNX Runtime from source. You can install ONNX Runtime using pip, like this:

```shell
pip install onnxruntime
```

Once ONNX Runtime is installed, you can use it to execute the ONNX model on your IoT device. Here is an example of how you might do this using the Python API for ONNX Runtime:

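```python
import onnxruntime

# Load the ONNX model
session = onnxruntime.InferenceSession("my_model.onnx", providers=["CPUExecutionProvider"])

# Look up the input name rather than assuming it is "input";
# converters often generate their own tensor names
input_name = session.get_inputs()[0].name

# Generate some input data: a NumPy array whose shape and dtype
# (usually float32) match the model's input
input_data = ...

# Run the model; passing None returns every output
outputs = session.run(None, {input_name: input_data})

# Process the output
output = outputs[0]
```

Finally, you can use the output of the model to drive your IoT application. For example, if the model is a classifier, you might use the predicted class labels to control the behavior of your device.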

In summary, the goal of ONNX is to enable developers to use the tools and frameworks that work best for them, without being constrained by the limitations of a particular framework. By providing a standard way to represent models, ONNX makes it easier for developers to share models and collaborate with others, and to take advantage of the performance and feature improvements that are continuously being made in different deep learning frameworks.

ONNX has gained widespread adoption in the deep learning community, and is supported by a large number of tools and frameworks, including PyTorch, TensorFlow, CNTK, and many others. It is an active open-source project with contributions from developers at various companies and organisations.
